Customer Service Software

Competitor Study

May 2023

Company: Fortune 15 Information Technology Company

Stakeholder: UX Researcher

Role: Lead UX Researcher, supported by UXR Manager

Method: Hybrid methods

Tools: UserTesting, Microsoft Teams, Google Sheets, PowerPoint, Mural

Timeframe: 8 weeks

Study Overview

Research Objectives

The ‘Customer Service’ Product Team at this Fortune 15 Information Technology Company is investing heavily in the modern customer-centric agent experience, specifically supporting agent scenarios across all channels (including phone, chat, and emails) to provide quick and case-free issue resolutions.

The goals of this study were to help the Product Team understand:

How well their agent experience benchmarks against leading competitors.

What features leading product experiences offer agents to help them efficiently accomplish essential tasks, and how the product in question can adopt them to become a market leader in usability.

Methodology

Method Overview

After conducting a kickoff call to grasp the stakeholders' objectives, I began determining the best methodology to use for this study. To capture insights from both competitor products and real agents' experiences with these products, hybrid methods were applied:

A Competitive Heuristic analysis to get an overall review of the product in question alongside its top three competitors, assessing each against established usability heuristics. Ultimately, this process aimed to pinpoint experience gaps among all four products, enabling the identification of opportunities for enhancement within the product in question.

In-depth interviews with the target persona.

A research workshop, facilitated by UserTesting, with the cross-functional team to determine areas to prioritize and define clear action items and next steps.

After determining the study methodology, my next step was to identify the competitors to include in the competitive heuristic analysis. This involved pinpointing key players through a blend of thorough market research and stakeholder consultation:

1. Conduct Market Research: I dedicated a day to researching the customer relationship management (CRM) software market to identify companies offering solutions similar to the product in question. I wanted to spot direct competitors targeting the same customer segments and addressing similar user needs, and to uncover indirect competitors that might offer alternative ways of addressing those needs.

2. Engage with Stakeholders: I sought input from the project stakeholder to understand their perspectives on competitors widely acknowledged or deemed significant in the industry. Additionally, I collaborated with internal team members, such as sales representatives, leveraging their valuable insights gained from interactions with customers and competitors in the market, to gather further viewpoints on key competitors.

To thoroughly examine each competitor, I opted to focus on a total of three:

Two direct competitors operating in the customer relationship management (CRM) and customer service software market. These competitors cater to a wide range of industries, including manufacturing, retail, financial services, healthcare, and professional services, and offer features such as case management, ticketing systems, knowledge bases, omnichannel support, analytics, and automation tools.

I selected Salesforce Service Cloud and Zendesk due to their direct competition with the product in question, offering similar features and functionalities tailored to meet the needs of businesses seeking effective customer service management solutions. All three platforms emphasize customer service management, omnichannel support, customization and integration, and a focus on enhancing the overall customer experience.

Additionally, I included one indirect competitor that addresses similar customer needs or business challenges. Though not operating in the same market segment, this competitor provides alternative solutions that customers might consider when managing customer interactions and improving support processes.

I selected HubSpot, which focuses primarily on marketing and sales automation. While HubSpot's primary emphasis differs from that of the product in question, it does provide customer service features enabling businesses to handle customer inquiries, track interactions, and offer support.

To address the objectives of this study, which were to understand how well the agent experience benchmarks against leading competitors and to identify problem areas, the heuristic evaluation needed to center on the essential user journeys within the modern customer-centric agent experience.

It was imperative for me to grasp the Agent Persona, a task facilitated by insights gleaned from a prior study I conducted on this product, coupled with findings from another researcher's work. The stakeholder provided a brief detailing the Agent Persona derived from these two studies, encompassing details such as common channels used to communicate with customers, average daily workload in terms of cases handled, and a comprehensive journey map delineating the steps agents undertake to resolve various customer issues across different scenarios.

I divided the Agent workflow into stages: Triage, Contact, Research, Troubleshoot, Resolve, Conclude, and Wrap-up. I then turned each stage into a task for the Heuristic Evaluation, as in the examples below.

Stage: Contact

Task: Customer Carmen Smith sends an email stating they are having an issue with a product they have purchased.

Stage: Research

Task: Has Customer Carmen Smith contacted us before? What have they contacted us about? Have they had this same issue in the past?

A task was created for each stage of the Agent workflow, resulting in a total of 7 tasks.

Heuristic Evaluation Outcome

Findings

The Heuristic Evaluation confirmed that the current product supports the agent experience extremely well in several areas, positioning it as a leader in usability, yet it also surfaced areas of opportunity.

As the callout below shows:

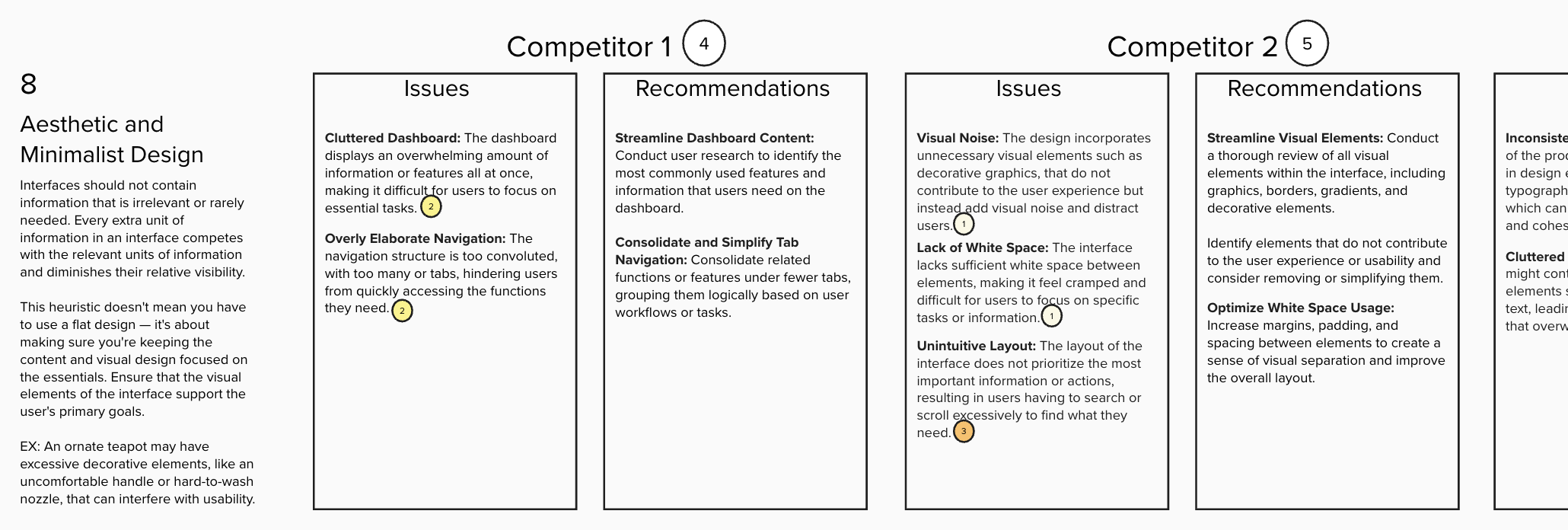

The product in question distinguished itself from competitors in terms of Heuristic Principles 4 and 8 (Consistency and Standards, and Aesthetic and Minimalist Design). Notably, while most competitor products opened tasks in new windows, resulting in agent overload and inefficiency due to an excess of open tabs, the current product showcased a more cohesive user experience. It seamlessly integrated agent tasks and tools into a unified space across the platform, promoting consistency and enhancing usability.

The product in question exhibited a higher severity score for Heuristic Principle 7 (Flexibility and Efficiency of Use). A significant finding from the Heuristic Evaluation was the absence of automation features in the current product. While all four products enhance agent productivity by utilizing natural language processing to suggest features such as quick replies or templates, the current product lacked more modern shortcut functionalities like tagging. Consequently, delving deeper into this aspect of automation was a priority for exploration during the in-depth interviews.

Key Findings slide from Report Deck

PART 3: Research Workshop

The final deliverable deck was shared with the main stakeholder as well as the larger ‘Customer Service’ Product Team to allow them to review findings ahead of our Research Workshop.

I led a 90-minute workshop with the 'Customer Service' Product Team, aiming to assist them in identifying priority areas and establishing actionable items and next steps.

Jakob Nielsen's 10 Usability Heuristics

After getting trial accounts to all the products being assessed, I met with my supporting researcher to ensure a mutual understanding of the tasks and heuristics designated for evaluating each product.

We each evaluated all four products independently, with a maximum of six hours to do so. During the evaluation of each product, we assumed the role of a Customer Service Agent and worked through each of the seven tasks created for the stages of the Agent workflow.

To ensure thoroughness in our evaluations, we established the following process for each product:

First, we went through each task once just to learn the system, without attempting to evaluate anything. This initial step was crucial because we were unfamiliar with the products.

Once we felt sufficiently acquainted with the product, we transitioned to assuming the role of an Agent and revisited each task. During this second pass, our focus shifted towards identifying design elements, features, or decisions that violate one of the 10 heuristics.

We made note of any usability issues or violations of heuristic principles encountered during the evaluation, making sure to describe each issue clearly, including its impact on user experience, which was determined by a severity scale we agreed upon (see screenshot below). Lastly, we made sure to include any potential recommendations for improvement.

Scale used to determine the severity of usability issue

After completing our individual assessments, we centralized our findings on a virtual Mural board. This board provided a designated area for documenting all the issues identified within each heuristic across all four products. The synthesis process included removing duplicate findings and arranging the issues by severity rating. Through this process, we gained valuable insights into the comparative usability performance of each product. This enabled us to pinpoint which product had the greatest number of heuristic violations and gave clear visibility into its overall usability standing.
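The synthesis steps described above (removing duplicates, ordering by severity, and counting violations per product) were done by hand on the Mural board. As a rough illustration only, the same logic could be sketched in Python. The `Finding` structure, the 0 to 4 severity scale, the rule of keeping the higher rating when two evaluators logged the same issue, and every example entry are assumptions made for this sketch, not data or rules from the study.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    product: str    # placeholder product label, not a real result
    heuristic: int  # Nielsen heuristic number, 1-10
    issue: str      # short description of the usability issue
    severity: int   # assumed scale: 0 (not a problem) to 4 (severe)


def synthesize(findings):
    """Drop duplicate findings, then sort by severity (highest first)."""
    unique = {}
    for f in findings:
        key = (f.product, f.heuristic, f.issue.strip().lower())
        # Assumed rule: if both evaluators logged the same issue,
        # keep the entry with the higher severity rating.
        if key not in unique or f.severity > unique[key].severity:
            unique[key] = f
    return sorted(unique.values(), key=lambda f: f.severity, reverse=True)


def violations_per_product(findings):
    """Count the unique findings recorded against each product."""
    return Counter(f.product for f in findings)


# Made-up entries from two evaluators, for illustration only.
raw = [
    Finding("Product A", 8, "Cluttered case view", 2),
    Finding("Product A", 8, "cluttered case view ", 3),  # duplicate entry
    Finding("Product B", 4, "Tasks open in new windows", 3),
    Finding("Product A", 7, "No keyboard shortcuts", 1),
]

merged = synthesize(raw)
counts = violations_per_product(merged)
```

Here `merged` holds one entry per unique issue, most severe first, and `counts` gives the number of unique violations per product, which is the figure used to compare overall usability standing.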

Utilizing affinity mapping, which involved clustering similar issues on our virtual whiteboard, proved instrumental in identifying shared usability concerns across all products. This process effectively highlighted opportunities for enhancing the competitive edge of the product.

PART 2: In-Depth Interviews

The analysis of the in-depth interviews reaffirmed that the product in question lacks many of the modernized experience features that competitor products possess, particularly the automation features identified as a gap in the Heuristic Evaluation.

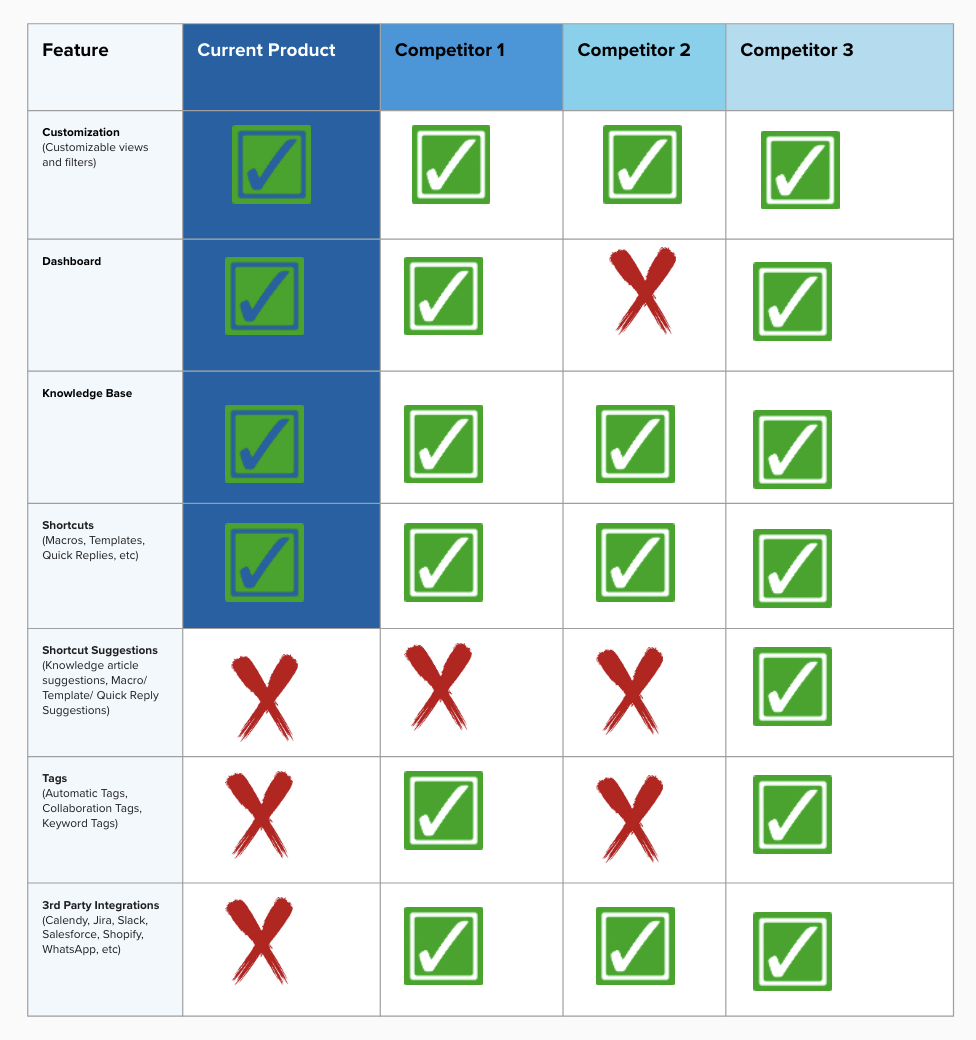

In the report, I first wanted to provide a clear high-level overview of features through a comparison chart.

Example of Feature Comparison Chart

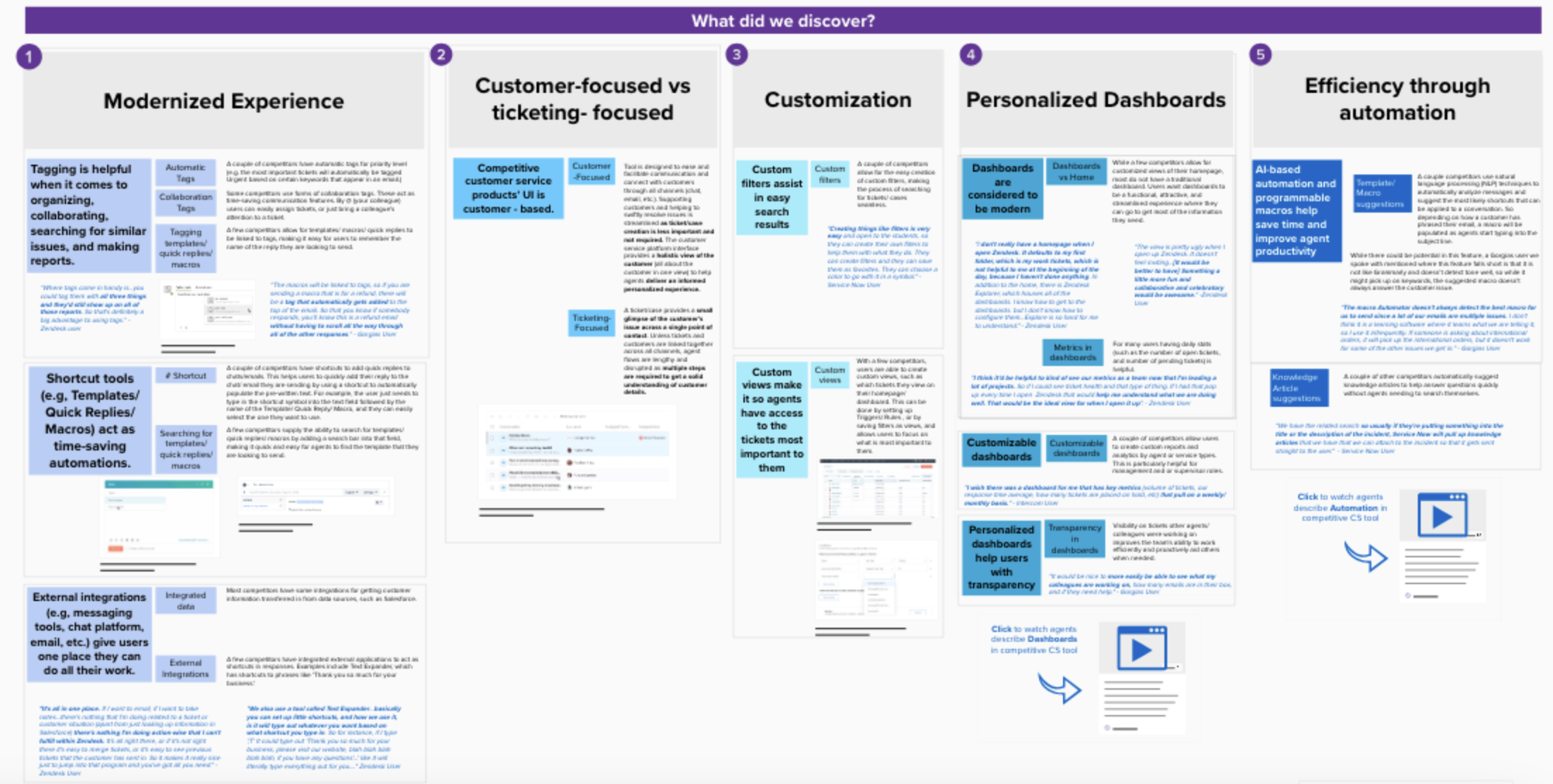

For the remainder of the Research Report, my objective was to integrate the insights from the heuristic evaluation with the rich qualitative data obtained from the in-depth interviews. The detailed findings slides were organized into five thematic categories derived from the affinity mapping process. These categories encompassed modernized experience, customer-focused versus ticketing-focused approaches, customization, personalized dashboards, and efficiency through automation.

Each slide aimed to provide a recommendation and a direct participant quote.

Example of Slides from the Report Deck

The feature comparison chart revealed that:

While the product in question offers numerous features aiding agents in efficiently completing essential tasks, it falls short in some areas compared to competitor products.

Addressing these gaps by incorporating similar features and enhancing them to surpass competitors' offerings is imperative to become a market leader.

For example, all the competitor products allow third-party integrations, including messaging tools, chat platforms, email, and calendars, while the current product does not. The company that makes the product in question is a leader in developing software solutions that automate workflows to improve productivity. Most customers want a fast and intuitive tool that integrates well with other tools and gives them one place to do all their work. Finding a way to integrate some of the company's other internal products into this one could help make it the one-stop shop agents are looking for.

Example of violations against Heuristic 8 including issues and recommendations from Mural board.

While I had already gathered usability feedback on the product in question from a previous benchmark study, I aimed to delve deeper into understanding competitor products by leveraging customer insights through in-depth interviews.

I facilitated 60-minute discovery interviews on the UserTesting platform, where participants were prompted to share their experiences using competitor products.

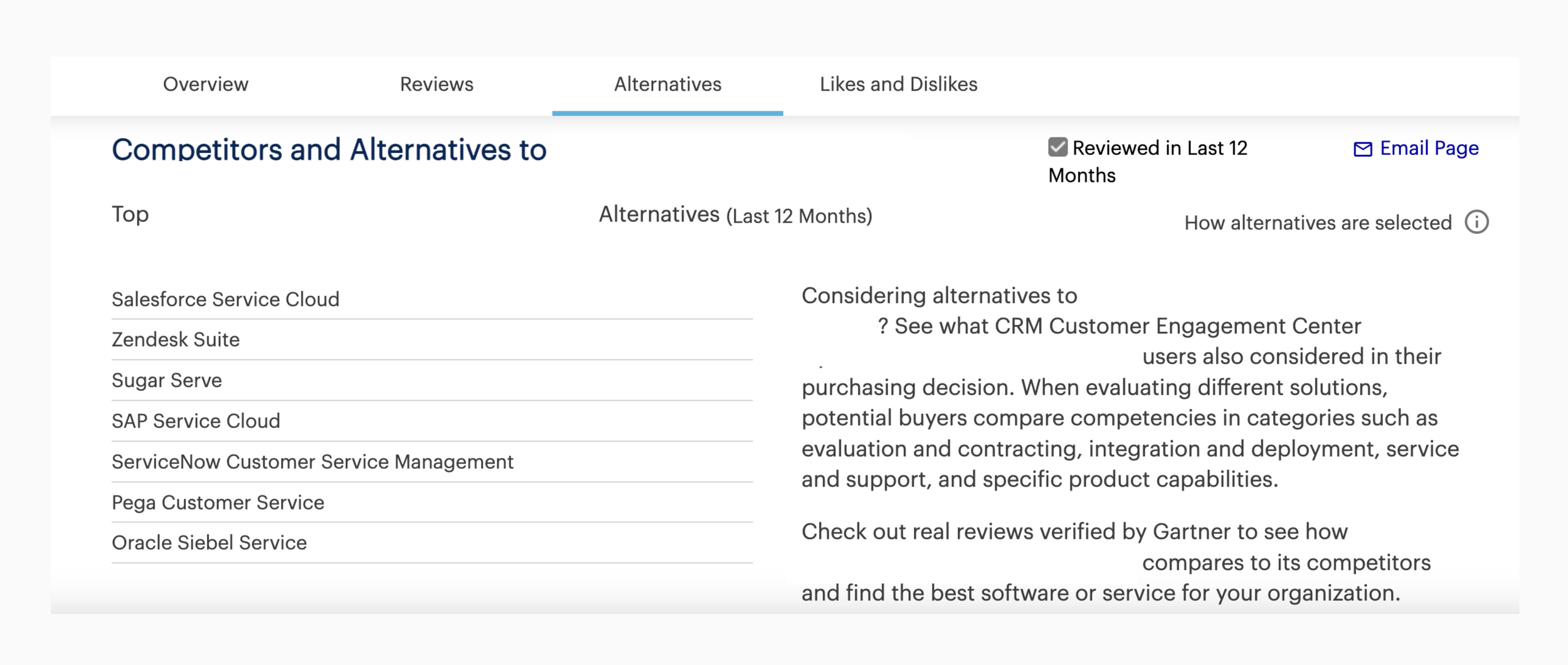

List of competitors and alternative products provided by Gartner.

(Product and Vendor alternatives were selected from other vendors/products within the market by using a combination of user responses to the review question “Which other vendors did you consider in your evaluation?” and total review volume for those vendors/products within the market.)

Defining the Heuristics

Having outlined the tasks to focus the heuristic evaluation on key user journeys within the modern customer-centric agent experience, my next step was to establish the criteria, or heuristics, against which I would evaluate each competitor's product.

For this assessment, I opted to employ all ten of Jakob Nielsen’s heuristic principles.

My Process

Analysis

Defining the Scope

PART 1: Competitive Heuristic Evaluation

Identifying Competitors

The Competitors

Method

Recruitment Details

I created screener questions to recruit participants through UserTesting.com using the following criteria:

Work part or full-time in a customer service role.

Use at least one of the competitor products in their role at work.

I settled on a total of five participants, which would allow me to identify recurring themes or patterns in user behavior, preferences, and pain points.

Test Details

The discovery sessions were conducted in a conversational manner, devoid of any mockups or additional stimuli. Instead, they followed a flexible sequence:

Introduction + Warm-Up

Competitor Product Experience

Their everyday usage of the product/s

Positives of using the product/s

Challenges or Pain Points of using the product/s

How they would change the product/s if given a magic wand

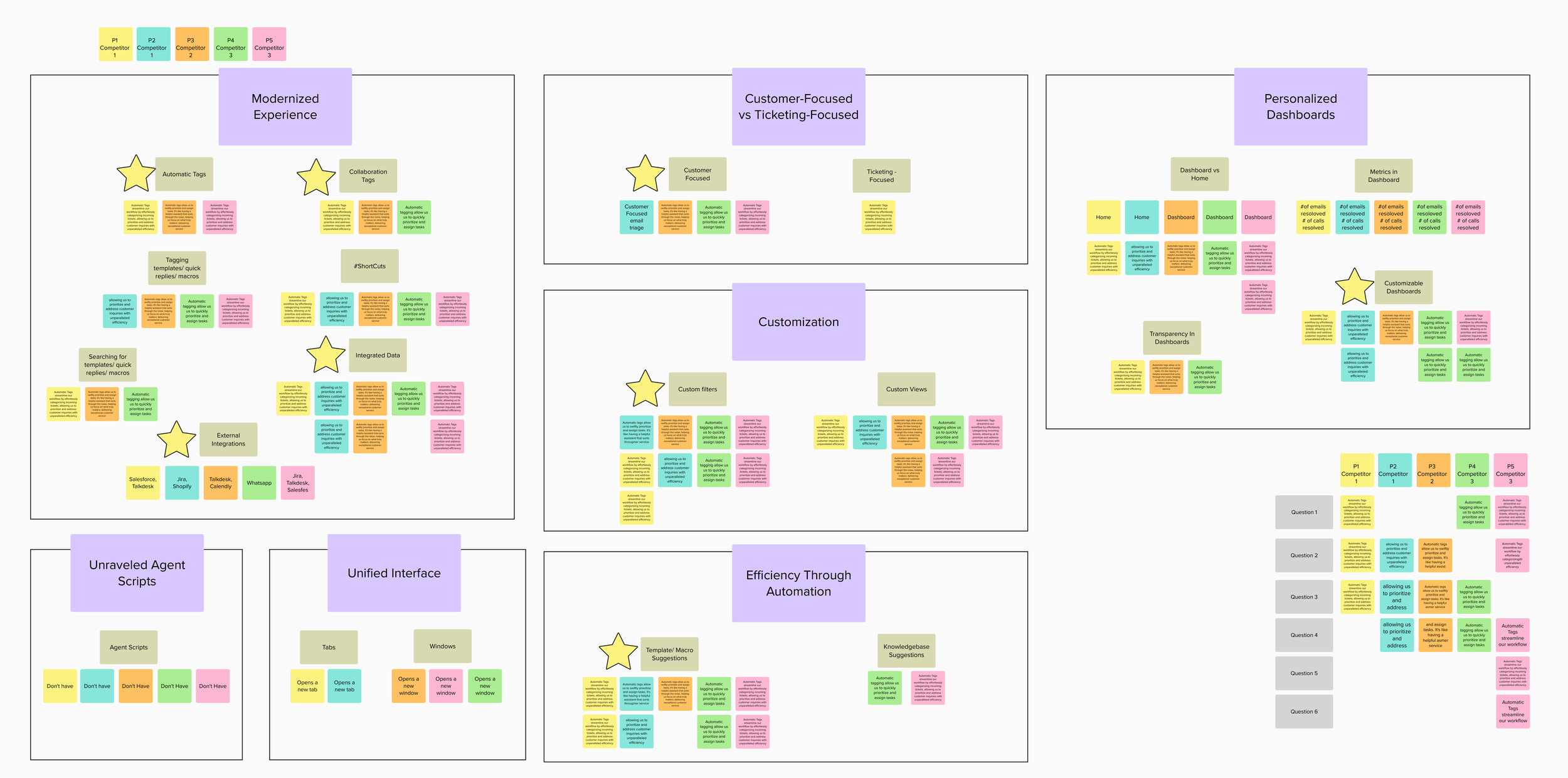

For this phase of the study, I once again utilized Mural. Each session was annotated, with participant quotes and sentiments documented on individual sticky notes within a Mural Board.

Analysis

Working alongside my supporting researcher, I ran an affinity mapping exercise to consolidate common themes. This process let me structure my report around thematic finding categories, including modernized experience, customer-focused versus ticketing-focused experience, customization, personalized dashboards, and efficiency through automation.

As illustrated in the screenshot of the Mural board:

Each participant was assigned a distinct color. This approach facilitated the visualization of not only each participant's contributions but also which competitors possessed (or lacked) specific features.

With these features in mind, I marked the areas where the product in question was currently lacking with a star, aiding in the visualization of potential areas for improvement.

Example of affinity mapping of annotations from moderated studies (in Mural)

Overall Outcome

Comparison Chart

Detailed Findings Slides

Method

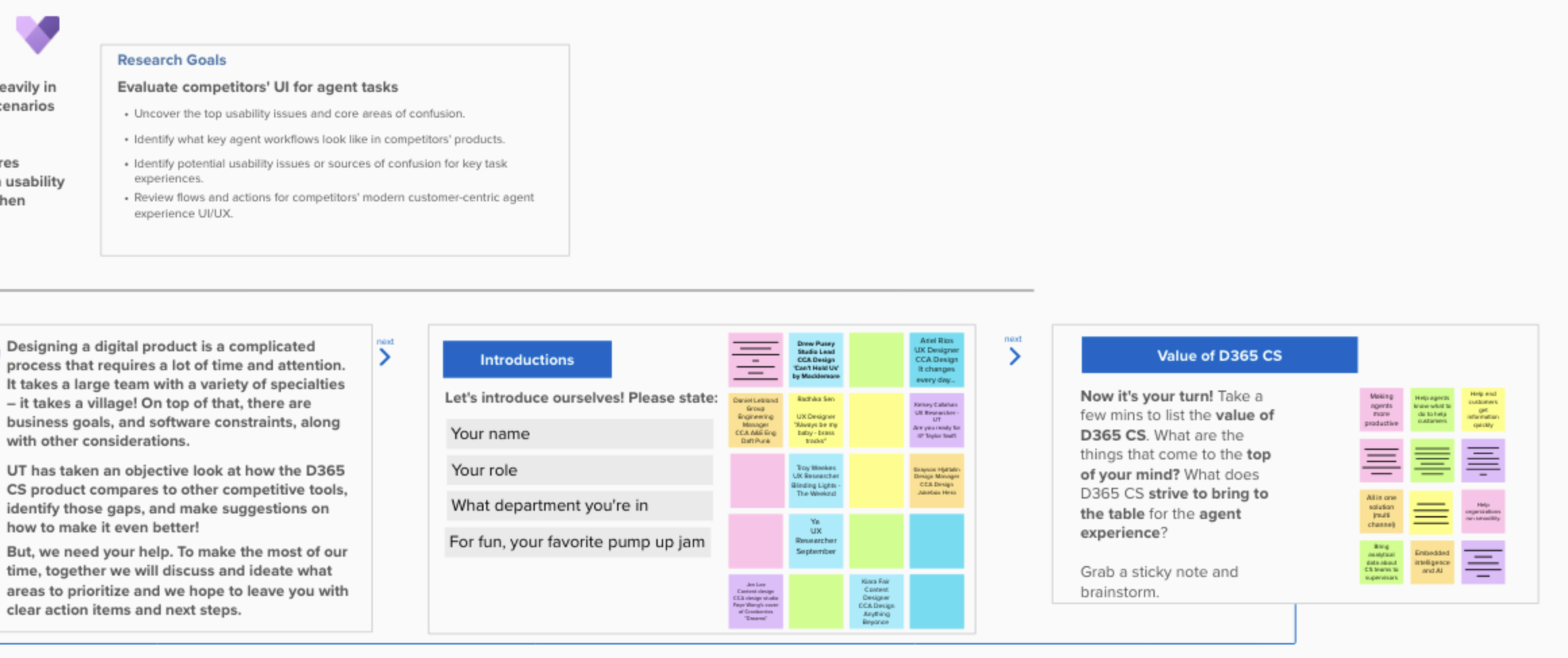

Workshop Details

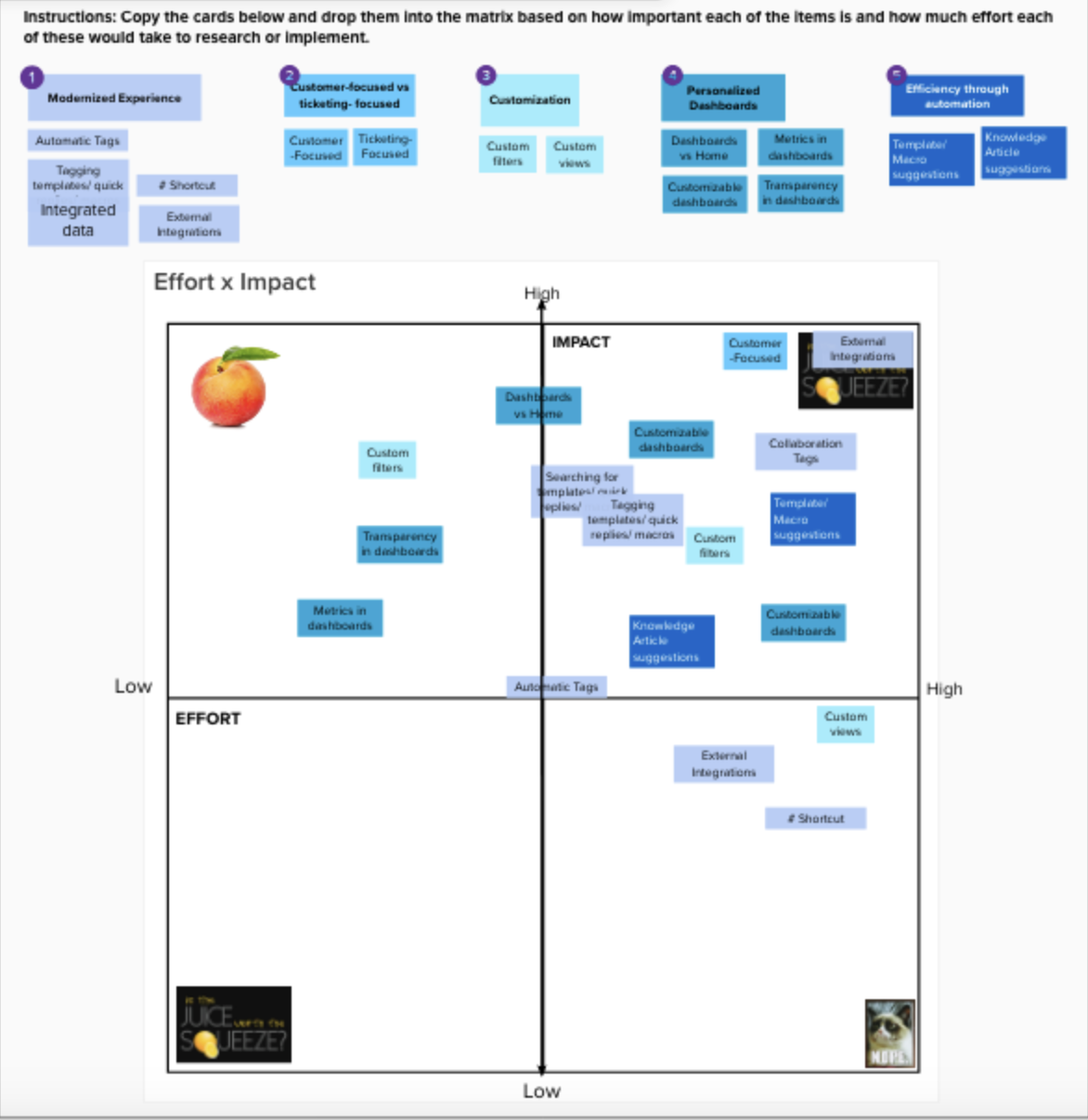

I prepared for the workshop by setting up another Mural board that provided an overview of the project and its objectives. Additionally, I incorporated interactive activities, including:

A warm-up exercise to acclimate the team to using Mural.

Brainstorming session to identify the core values of their product, focusing on its contributions to the agent experience.

Next Steps

Section of Mural board used in the Workshop

Findings Presentation

Section of Mural board used in the Workshop

I used the next portion of the workshop to present the study's findings in greater detail, offering the team insights beyond what was presented in the research report alone.

Section of Mural board used in the Workshop

Impact Matrix Exercise

The central focus of the workshop was an Effort-Impact Matrix exercise. Following a detailed review of each feature, participants placed it in the matrix according to its perceived importance and the anticipated effort required for research or implementation.
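The team did this placement by hand on the Mural board. Purely as an illustration of how such a matrix sorts features, the sketch below assigns each feature to a quadrant from two scores. The 1 to 5 scoring, the midpoint threshold, the quadrant names, and the feature names are all assumptions for this example and do not reflect the workshop's actual labels or results.

```python
def quadrant(impact, effort, midpoint=3):
    """Place a feature in one of four quadrants of an effort-impact matrix.

    Scores are assumed to run from 1 (low) to 5 (high); a score at or
    above the midpoint counts as "high". Quadrant names are conventional
    labels chosen for this sketch, not the ones used in the workshop.
    """
    high_impact = impact >= midpoint
    high_effort = effort >= midpoint
    if high_impact and not high_effort:
        return "quick win"
    if high_impact and high_effort:
        return "major project"
    if not high_impact and not high_effort:
        return "fill-in"
    return "low priority"


# Hypothetical features with (impact, effort) scores, for illustration only.
features = {
    "Feature X": (5, 2),
    "Feature Y": (4, 5),
    "Feature Z": (2, 1),
}

placement = {name: quadrant(i, e) for name, (i, e) in features.items()}
```

Features landing in the high-impact, low-effort quadrant are the natural candidates to prioritize first.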

Section of Mural board used in the Workshop


Participating in the workshop provided the team an opportunity to explore the value of their product, while the study findings prompted them to consider ways to enhance the product to enable agents to efficiently complete essential tasks. By identifying these features and understanding their usability challenges, the product team can implement enhancements that position them as a market leader, distinguishing them from their competitors.

Instead of solely receiving a report deck outlining the research findings, the product team had the chance to discuss the findings with the research team and collaboratively devise a plan for next steps. This collaboration enriches the product team's understanding and improves the likelihood of successful project outcomes.

Lastly, the team brainstormed next steps they could take in terms of research, design and development, and collaboration with other internal teams.